Compare commits

37 commits

master

...

slf/chain-

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

94e4eac12c | ||

|

|

54851488cc | ||

|

|

e0c6aeac94 | ||

|

|

f2ee7b5045 | ||

|

|

fab03a90c6 | ||

|

|

f89c9c43d3 | ||

|

|

2f16a3a944 | ||

|

|

7817d7c160 | ||

|

|

6566420e32 | ||

|

|

8ff177f0c5 | ||

|

|

666a05b50b | ||

|

|

8cdf69c8b9 | ||

|

|

f129801f29 | ||

|

|

2fe2a23c79 | ||

|

|

6ed7293b02 | ||

|

|

b8063c9575 | ||

|

|

b558de2244 | ||

|

|

4e01f5bac0 | ||

|

|

cf56e2d388 | ||

|

|

cebb205b52 | ||

|

|

6e4e2ac6bf | ||

|

|

fbdf606128 | ||

|

|

938f3c16f2 | ||

|

|

24fc2a773c | ||

|

|

77f16523f4 | ||

|

|

07e477598a | ||

|

|

ecd56a194e | ||

|

|

187ed6ddce | ||

|

|

b03f88de43 | ||

|

|

2ae8a42d07 | ||

|

|

50dd83919a | ||

|

|

35c9f41cf4 | ||

|

|

4f28191552 | ||

|

|

1488587dd9 | ||

|

|

0b4e106635 | ||

|

|

27f17e88ad | ||

|

|

f4a2a453bd |

32

.gitignore

vendored

|

|

@ -1,33 +1,9 @@

|

|||

prototype/chain-manager/patch.*

|

||||

.eqc-info

|

||||

.eunit

|

||||

deps

|

||||

dev

|

||||

*.o

|

||||

ebin/*.beam

|

||||

*.plt

|

||||

erl_crash.dump

|

||||

eqc

|

||||

rel/example_project

|

||||

.concrete/DEV_MODE

|

||||

.rebar

|

||||

edoc

|

||||

|

||||

# Dialyzer stuff

|

||||

.dialyzer-last-run.txt

|

||||

.ebin.native

|

||||

.local_dialyzer_plt

|

||||

dialyzer_unhandled_warnings

|

||||

dialyzer_warnings

|

||||

*.plt

|

||||

|

||||

# PB artifacts for Erlang

|

||||

include/machi_pb.hrl

|

||||

|

||||

# Release packaging

|

||||

rel/machi

|

||||

rel/vars/dev*vars.config

|

||||

|

||||

# Misc Scott cruft

|

||||

*.patch

|

||||

current_counterexample.eqc

|

||||

foo*

|

||||

RUNLOG*

|

||||

typescript*

|

||||

*.swp

|

||||

|

|

|

|||

|

|

@ -1,7 +0,0 @@

|

|||

language: erlang

|

||||

notifications:

|

||||

email: scott@basho.com

|

||||

script: "priv/test-for-gh-pr.sh"

|

||||

otp_release:

|

||||

- 17.5

|

||||

## No, Dialyzer is too different between 17 & 18: - 18.1

|

||||

|

|

@ -1,35 +0,0 @@

|

|||

# Contributing to the Machi project

|

||||

|

||||

The most helpful way to contribute is by reporting your experience

|

||||

through issues. Issues may not be updated while we review internally,

|

||||

but they're still incredibly appreciated.

|

||||

|

||||

Pull requests may take multiple engineers for verification and testing. If

|

||||

you're passionate enough to want to learn more on how you can get

|

||||

hands on in this process, reach out to

|

||||

[Matt Brender](mailto:mbrender@basho.com), your developer advocate.

|

||||

|

||||

Thank you for being part of the community! We love you for it.

|

||||

|

||||

## If you have a question or wish to provide design feedback/criticism

|

||||

|

||||

Please

|

||||

[open a support ticket at GitHub](https://github.com/basho/machi/issues/new)

|

||||

to ask questions and to provide feedback about Machi's

|

||||

design/documentation/source code.

|

||||

|

||||

## General development process

|

||||

|

||||

Machi is still a very young project within Basho, with a small team of

|

||||

developers; please bear with us as we grow out of "toddler" stage into

|

||||

a more mature open source software project.

|

||||

|

||||

* Fork the Machi source repo and/or the sub-projects that are affected

|

||||

by your change.

|

||||

* Create a topic branch for your change and checkout that branch.

|

||||

git checkout -b some-topic-branch

|

||||

* Make your changes and run the test suite if one is provided.

|

||||

* Commit your changes and push them to your fork.

|

||||

* Open pull-requests for the appropriate projects.

|

||||

* Contributors will review your pull request, suggest changes, and merge it when it’s ready and/or offer feedback.

|

||||

* To report a bug or issue, please open a new issue against this repository.

|

||||

630

FAQ.md

|

|

@ -1,630 +0,0 @@

|

|||

# Frequently Asked Questions (FAQ)

|

||||

|

||||

<!-- Formatting: -->

|

||||

<!-- All headings omitted from outline are H1 -->

|

||||

<!-- All other headings must be on a single line! -->

|

||||

<!-- Run: ./priv/make-faq.pl ./FAQ.md > ./tmpfoo; mv ./tmpfoo ./FAQ.md -->

|

||||

|

||||

# Outline

|

||||

|

||||

<!-- OUTLINE -->

|

||||

|

||||

+ [1 Questions about Machi in general](#n1)

|

||||

+ [1.1 What is Machi?](#n1.1)

|

||||

+ [1.2 What is a Machi chain?](#n1.2)

|

||||

+ [1.3 What is a Machi cluster?](#n1.3)

|

||||

+ [1.4 What is Machi like when operating in "eventually consistent" mode?](#n1.4)

|

||||

+ [1.5 What is Machi like when operating in "strongly consistent" mode?](#n1.5)

|

||||

+ [1.6 What does Machi's API look like?](#n1.6)

|

||||

+ [1.7 What licensing terms are used by Machi?](#n1.7)

|

||||

+ [1.8 Where can I find the Machi source code and documentation? Can I contribute?](#n1.8)

|

||||

+ [1.9 What is Machi's expected release schedule, packaging, and operating system/OS distribution support?](#n1.9)

|

||||

+ [2 Questions about Machi relative to {{something else}}](#n2)

|

||||

+ [2.1 How is Machi better than Hadoop?](#n2.1)

|

||||

+ [2.2 How does Machi differ from HadoopFS/HDFS?](#n2.2)

|

||||

+ [2.3 How does Machi differ from Kafka?](#n2.3)

|

||||

+ [2.4 How does Machi differ from Bookkeeper?](#n2.4)

|

||||

+ [2.5 How does Machi differ from CORFU and Tango?](#n2.5)

|

||||

+ [3 Machi's specifics](#n3)

|

||||

+ [3.1 What technique is used to replicate Machi's files? Can other techniques be used?](#n3.1)

|

||||

+ [3.2 Does Machi have a reliance on a coordination service such as ZooKeeper or etcd?](#n3.2)

|

||||

+ [3.3 Are there any presentations available about Humming Consensus](#n3.3)

|

||||

+ [3.4 Is it true that there's an allegory written to describe Humming Consensus?](#n3.4)

|

||||

+ [3.5 How is Machi tested?](#n3.5)

|

||||

+ [3.6 Does Machi require shared disk storage? e.g. iSCSI, NBD (Network Block Device), Fibre Channel disks](#n3.6)

|

||||

+ [3.7 Does Machi require or assume that servers with large numbers of disks must use RAID-0/1/5/6/10/50/60 to create a single block device?](#n3.7)

|

||||

+ [3.8 What language(s) is Machi written in?](#n3.8)

|

||||

+ [3.9 Can Machi run on Windows? Can Machi run on 32-bit platforms?](#n3.9)

|

||||

+ [3.10 Does Machi use the Erlang/OTP network distribution system (aka "disterl")?](#n3.10)

|

||||

+ [3.11 Can I use HTTP to write/read stuff into/from Machi?](#n3.11)

|

||||

|

||||

<!-- ENDOUTLINE -->

|

||||

|

||||

<a name="n1">

|

||||

## 1. Questions about Machi in general

|

||||

|

||||

<a name="n1.1">

|

||||

### 1.1. What is Machi?

|

||||

|

||||

Very briefly, Machi is a very simple append-only blob/file store.

|

||||

|

||||

Machi is

|

||||

"dumber" than many other file stores (i.e., lacking many features

|

||||

found in other file stores) such as HadoopFS or a simple NFS or CIFS file

|

||||

server.

|

||||

However, Machi is a distributed blob/file store, which makes it different

|

||||

(and, in some ways, more complicated) than a simple NFS or CIFS file

|

||||

server.

|

||||

|

||||

All Machi data is protected by SHA-1 checksums. By default, these

|

||||

checksums are calculated by the client to provide strong end-to-end

|

||||

protection against data corruption. (If the client does not provide a

|

||||

checksum, one will be generated by the first Machi server to handle

|

||||

the write request.) Internally, Machi uses these checksums for local

|

||||

data integrity checks and for server-to-server file synchronization

|

||||

and corrupt data repair.

|

||||

|

||||

As a distributed system, Machi can be configured to operate with

|

||||

either eventually consistent mode or strongly consistent mode. In

|

||||

strongly consistent mode, Machi can provide write-once file store

|

||||

service in the same style as CORFU. Machi can be an easy to use tool

|

||||

for building fully ordered, log-based distributed systems and

|

||||

distributed data structures.

|

||||

|

||||

In eventually consistent mode, Machi can remain available for writes

|

||||

during arbitrary network partitions. When a network partition is

|

||||

fixed, Machi can safely merge all file data together without data

|

||||

loss. Similar to the operation of

|

||||

Basho's

|

||||

[Riak key-value store, Riak KV](http://basho.com/products/riak-kv/),

|

||||

Machi can provide file writes during arbitrary network partitions and

|

||||

later merge all results together safely when the cluster recovers.

|

||||

|

||||

For a much longer answer, please see the

|

||||

[Machi high level design doc](https://github.com/basho/machi/tree/master/doc/high-level-machi.pdf).

|

||||

|

||||

<a name="n1.2">

|

||||

### 1.2. What is a Machi chain?

|

||||

|

||||

A Machi chain is a small number of machines that maintain a common set

|

||||

of replicated files. A typical chain is of length 2 or 3. For

|

||||

critical data that must be available despite several simultaneous

|

||||

server failures, a chain length of 6 or 7 might be used.

|

||||

|

||||

<a name="n1.3">

|

||||

### 1.3. What is a Machi cluster?

|

||||

|

||||

A Machi cluster is a collection of Machi chains that

|

||||

partitions/shards/distributes files (based on file name) across the

|

||||

collection of chains. Machi uses the "random slicing" algorithm (a

|

||||

variation of consistent hashing) to define the mapping of file name to

|

||||

chain name.

|

||||

|

||||

The cluster management service will be fully decentralized

|

||||

and run as a separate software service installed on each Machi

|

||||

cluster. This manager will appear to the local Machi server as simply

|

||||

another Machi file client. The cluster managers will take

|

||||

care of file migration as the cluster grows and shrinks in capacity

|

||||

and in response to day-to-day changes in workload.

|

||||

|

||||

Though the cluster manager has not yet been implemented,

|

||||

its design is fully decentralized and capable of operating despite

|

||||

multiple partial failure of its member chains. We expect this

|

||||

design to scale easily to at least one thousand servers.

|

||||

|

||||

Please see the

|

||||

[Machi source repository's 'doc' directory for more details](https://github.com/basho/machi/tree/master/doc/).

|

||||

|

||||

<a name="n1.4">

|

||||

### 1.4. What is Machi like when operating in "eventually consistent" mode?

|

||||

|

||||

Machi's operating mode dictates how a Machi cluster will react to

|

||||

network partitions. A network partition may be caused by:

|

||||

|

||||

* A network failure

|

||||

* A server failure

|

||||

* An extreme server software "hang" or "pause", e.g. caused by OS

|

||||

scheduling problems such as a failing/stuttering disk device.

|

||||

|

||||

The consistency semantics of file operations while in eventual

|

||||

consistency mode during and after network partitions are:

|

||||

|

||||

* File write operations are permitted by any client on the "same side"

|

||||

of the network partition.

|

||||

* File read operations are successful for any file contents where the

|

||||

client & server are on the "same side" of the network partition.

|

||||

* File read operations will probably fail for any file contents where the

|

||||

client & server are on "different sides" of the network partition.

|

||||

* After the network partition(s) is resolved, files are merged

|

||||

together from "all sides" of the partition(s).

|

||||

* Unique files are copied in their entirety.

|

||||

* Byte ranges within the same file are merged. This is possible

|

||||

due to Machi's restrictions on file naming and file offset

|

||||

assignment. Both file names and file offsets are always chosen

|

||||

by Machi servers according to rules which guarantee safe

|

||||

mergeability. Server-assigned names are a characteristic of a

|

||||

"blob store".

|

||||

|

||||

<a name="n1.5">

|

||||

### 1.5. What is Machi like when operating in "strongly consistent" mode?

|

||||

|

||||

The consistency semantics of file operations while in strongly

|

||||

consistency mode during and after network partitions are:

|

||||

|

||||

* File write operations are permitted by any client on the "same side"

|

||||

of the network partition if and only if a quorum majority of Machi servers

|

||||

are also accessible within that partition.

|

||||

* In other words, file write service is unavailable in any

|

||||

partition where only a minority of Machi servers are accessible.

|

||||

* File read operations are successful for any file contents where the

|

||||

client & server are on the "same side" of the network partition.

|

||||

* After the network partition(s) is resolved, files are repaired from

|

||||

the surviving quorum majority members to out-of-sync minority

|

||||

members.

|

||||

|

||||

Machi's design can provide the illusion of quorum minority write

|

||||

availability if the cluster is configured to operate with "witness

|

||||

servers". (This feaure partially implemented, as of December 2015.)

|

||||

See Section 11 of

|

||||

[Machi chain manager high level design doc](https://github.com/basho/machi/tree/master/doc/high-level-chain-mgr.pdf)

|

||||

for more details.

|

||||

|

||||

<a name="n1.6">

|

||||

### 1.6. What does Machi's API look like?

|

||||

|

||||

The Machi API only contains a handful of API operations. The function

|

||||

arguments shown below (in simplifed form) use Erlang-style type annotations.

|

||||

|

||||

append_chunk(Prefix:binary(), Chunk:binary(), CheckSum:binary()).

|

||||

append_chunk_extra(Prefix:binary(), Chunk:binary(), CheckSum:binary(), ExtraSpace:non_neg_integer()).

|

||||

read_chunk(File:binary(), Offset:non_neg_integer(), Size:non_neg_integer()).

|

||||

|

||||

checksum_list(File:binary()).

|

||||

list_files().

|

||||

|

||||

Machi allows the client to choose the prefix of the file name to

|

||||

append data to, but the Machi server will always choose the final file

|

||||

name and byte offset for each `append_chunk()` operation. This

|

||||

restriction on file naming makes it easy to operate in "eventually

|

||||

consistent" mode: files may be written to any server during network

|

||||

partitions and can be easily merged together after the partition is

|

||||

healed.

|

||||

|

||||

Internally, there is a more complex protocol used by individual

|

||||

cluster members to manage file contents and to repair damaged/missing

|

||||

files. See Figure 3 in

|

||||

[Machi high level design doc](https://github.com/basho/machi/tree/master/doc/high-level-machi.pdf)

|

||||

for more description.

|

||||

|

||||

The definitions of both the "high level" external protocol and "low

|

||||

level" internal protocol are in a

|

||||

[Protocol Buffers](https://developers.google.com/protocol-buffers/docs/overview)

|

||||

definition at [./src/machi.proto](./src/machi.proto).

|

||||

|

||||

<a name="n1.7">

|

||||

### 1.7. What licensing terms are used by Machi?

|

||||

|

||||

All Machi source code and documentation is licensed by

|

||||

[Basho Technologies, Inc.](http://www.basho.com/)

|

||||

under the [Apache Public License version 2](https://github.com/basho/machi/tree/master/LICENSE).

|

||||

|

||||

<a name="n1.8">

|

||||

### 1.8. Where can I find the Machi source code and documentation? Can I contribute?

|

||||

|

||||

All Machi source code and documentation can be found at GitHub:

|

||||

[https://github.com/basho/machi](https://github.com/basho/machi).

|

||||

The full URL for this FAQ is [https://github.com/basho/machi/blob/master/FAQ.md](https://github.com/basho/machi/blob/master/FAQ.md).

|

||||

|

||||

There are several "README" files in the source repository. We hope

|

||||

they provide useful guidance for first-time readers.

|

||||

|

||||

If you're interested in contributing code or documentation or

|

||||

ideas for improvement, please see our contributing & collaboration

|

||||

guidelines at

|

||||

[https://github.com/basho/machi/blob/master/CONTRIBUTING.md](https://github.com/basho/machi/blob/master/CONTRIBUTING.md).

|

||||

|

||||

<a name="n1.9">

|

||||

### 1.9. What is Machi's expected release schedule, packaging, and operating system/OS distribution support?

|

||||

|

||||

Basho expects that Machi's first major product release will take place

|

||||

during the 2nd quarter of 2016.

|

||||

|

||||

Basho's official support for operating systems (e.g. Linux, FreeBSD),

|

||||

operating system packaging (e.g. CentOS rpm/yum package management,

|

||||

Ubuntu debian/apt-get package management), and

|

||||

container/virtualization have not yet been chosen. If you wish to

|

||||

provide your opinion, we'd love to hear it. Please

|

||||

[open a support ticket at GitHub](https://github.com/basho/machi/issues/new)

|

||||

and let us know.

|

||||

|

||||

<a name="n2">

|

||||

## 2. Questions about Machi relative to {{something else}}

|

||||

|

||||

<a name="better-than-hadoop">

|

||||

<a name="n2.1">

|

||||

### 2.1. How is Machi better than Hadoop?

|

||||

|

||||

This question is frequently asked by trolls. If this is a troll

|

||||

question, the answer is either, "Nothing is better than Hadoop," or

|

||||

else "Everything is better than Hadoop."

|

||||

|

||||

The real answer is that Machi is not a distributed data processing

|

||||

framework like Hadoop is.

|

||||

See [Hadoop's entry in Wikipedia](https://en.wikipedia.org/wiki/Apache_Hadoop)

|

||||

and focus on the description of Hadoop's MapReduce and YARN; Machi

|

||||

contains neither.

|

||||

|

||||

<a name="n2.2">

|

||||

### 2.2. How does Machi differ from HadoopFS/HDFS?

|

||||

|

||||

This is a much better question than the

|

||||

[How is Machi better than Hadoop?](#better-than-hadoop)

|

||||

question.

|

||||

|

||||

[HadoopFS's entry in Wikipedia](https://en.wikipedia.org/wiki/Apache_Hadoop#HDFS)

|

||||

|

||||

One way to look at Machi is to consider Machi as a distributed file

|

||||

store. HadoopFS is also a distributed file store. Let's compare and

|

||||

contrast.

|

||||

|

||||

<table>

|

||||

|

||||

<tr>

|

||||

<td> <b>Machi</b>

|

||||

<td> <b>HadoopFS (HDFS)</b>

|

||||

|

||||

<tr>

|

||||

<td> Not POSIX compliant

|

||||

<td> Not POSIX compliant

|

||||

|

||||

<tr>

|

||||

<td> Immutable file store with append-only semantics (simplifying

|

||||

things a little bit).

|

||||

<td> Immutable file store with append-only semantics

|

||||

|

||||

<tr>

|

||||

<td> File data may be read concurrently while file is being actively

|

||||

appended to.

|

||||

<td> File must be closed before a client can read it.

|

||||

|

||||

<tr>

|

||||

<td> No concept (yet) of users or authentication (though the initial

|

||||

supported release will support basic user + password authentication).

|

||||

Machi will probably never natively support directories or ACLs.

|

||||

<td> Has concepts of users, directories, and ACLs.

|

||||

|

||||

<tr>

|

||||

<td> Machi does not allow clients to name their own files or to specify data

|

||||

placement/offset within a file.

|

||||

<td> While not POSIX compliant, HDFS allows a fairly flexible API for

|

||||

managing file names and file writing position within a file (during a

|

||||

file's writable phase).

|

||||

|

||||

<tr>

|

||||

<td> Does not have any file distribution/partitioning/sharding across

|

||||

Machi chains: in a single Machi chain, all files are replicated by

|

||||

all servers in the chain. The "random slicing" technique is used

|

||||

to distribute/partition/shard files across multiple Machi clusters.

|

||||

<td> File distribution/partitioning/sharding is performed

|

||||

automatically by the HDFS "name node".

|

||||

|

||||

<tr>

|

||||

<td> Machi requires no central "name node" for single chain use or

|

||||

for multi-chain cluster use.

|

||||

<td> Requires a single "namenode" server to maintain file system contents

|

||||

and file content mapping. (May be deployed with a "secondary

|

||||

namenode" to reduce unavailability when the primary namenode fails.)

|

||||

|

||||

<tr>

|

||||

<td> Machi uses Chain Replication to manage all file replicas.

|

||||

<td> The HDFS name node uses an ad hoc mechanism for replicating file

|

||||

contents. The HDFS file system metadata (file names, file block(s)

|

||||

locations, ACLs, etc.) is stored by the name node in the local file

|

||||

system and is replicated to any secondary namenode using snapshots.

|

||||

|

||||

<tr>

|

||||

<td> Machi replicates files *N* ways where *N* is the length of the

|

||||

Chain Replication chain. Typically, *N=2*, but this is configurable.

|

||||

<td> HDFS typical replicates file contents *N=3* ways, but this is

|

||||

configurable.

|

||||

|

||||

<tr>

|

||||

<td> All Machi file data is protected by SHA-1 checksums generated by

|

||||

the client prior to writing by Machi servers.

|

||||

<td> Optional file checksum protection may be implemented on the

|

||||

server side.

|

||||

|

||||

</table>

|

||||

|

||||

<a name="n2.3">

|

||||

### 2.3. How does Machi differ from Kafka?

|

||||

|

||||

Machi is rather close to Kafka in spirit, though its implementation is

|

||||

quite different.

|

||||

|

||||

<table>

|

||||

|

||||

<tr>

|

||||

<td> <b>Machi</b>

|

||||

<td> <b>Kafka</b>

|

||||

|

||||

<tr>

|

||||

<td> Append-only, strongly consistent file store only

|

||||

<td> Append-only, strongly consistent log file store + additional

|

||||

services: for example, producer topics & sharding, consumer groups &

|

||||

failover, etc.

|

||||

|

||||

<tr>

|

||||

<td> Not yet code complete nor "battle tested" in large production

|

||||

environments.

|

||||

<td> "Battle tested" in large production environments.

|

||||

|

||||

<tr>

|

||||

<td> All Machi file data is protected by SHA-1 checksums generated by

|

||||

the client prior to writing by Machi servers.

|

||||

<td> Each log entry is protected by a 32 bit CRC checksum.

|

||||

|

||||

</table>

|

||||

|

||||

In theory, it should be "quite straightforward" to remove these parts

|

||||

of Kafka's code base:

|

||||

|

||||

* local file system I/O for all topic/partition/log files

|

||||

* leader/follower file replication, ISR ("In Sync Replica") state

|

||||

management, and related log file replication logic

|

||||

|

||||

... and replace those parts with Machi client API calls. Those parts

|

||||

of Kafka are what Machi has been designed to do from the very

|

||||

beginning.

|

||||

|

||||

See also:

|

||||

<a href="#corfu-and-tango">How does Machi differ from CORFU and Tango?</a>

|

||||

|

||||

<a name="n2.4">

|

||||

### 2.4. How does Machi differ from Bookkeeper?

|

||||

|

||||

Sorry, we haven't studied Bookkeeper very deeply or used Bookkeeper

|

||||

for any non-trivial project.

|

||||

|

||||

One notable limitation of the Bookkeeper API is that a ledger cannot

|

||||

be read by other clients until it has been closed. Any byte in a

|

||||

Machi file that has been written successfully may

|

||||

be read immedately by any other Machi client.

|

||||

|

||||

The name "Machi" does not have three consecutive pairs of repeating

|

||||

letters. The name "Bookkeeper" does.

|

||||

|

||||

<a name="corfu-and-tango">

|

||||

<a name="n2.5">

|

||||

### 2.5. How does Machi differ from CORFU and Tango?

|

||||

|

||||

Machi's design borrows very heavily from CORFU. We acknowledge a deep

|

||||

debt to the original Microsoft Research papers that describe CORFU's

|

||||

original design and implementation.

|

||||

|

||||

<table>

|

||||

|

||||

<tr>

|

||||

<td> <b>Machi</b>

|

||||

<td> <b>CORFU</b>

|

||||

|

||||

<tr>

|

||||

<td> Writes & reads may be on byte boundaries

|

||||

<td> Wries & reads must be on page boundaries, e.g. 4 or 8 KBytes, to

|

||||

align with server storage based on flash NVRAM/solid state disk (SSD).

|

||||

|

||||

<tr>

|

||||

<td> Provides multiple "logs", where each log has a name and is

|

||||

appended to & read from like a file. A read operation requires a 3-tuple:

|

||||

file name, starting byte offset, number of bytes.

|

||||

<td> Provides a single "log". A read operation requires only a

|

||||

1-tuple: the log page number. (A protocol option exists to

|

||||

request multiple pages in a single read query?)

|

||||

|

||||

<tr>

|

||||

<td> Offers service in either strongly consistent mode or eventually

|

||||

consistent mode.

|

||||

<td> Offers service in strongly consistent mode.

|

||||

|

||||

<tr>

|

||||

<td> May be deployed on solid state disk (SSD) or Winchester hard disks.

|

||||

<td> Designed for use with solid state disk (SSD) but can also be used

|

||||

with Winchester hard disks (with a performance penalty if used as

|

||||

suggested by use cases described by the CORFU papers).

|

||||

|

||||

<tr>

|

||||

<td> All Machi file data is protected by SHA-1 checksums generated by

|

||||

the client prior to writing by Machi servers.

|

||||

<td> Depending on server & flash device capabilities, each data page

|

||||

may be protected by a checksum (calculated independently by each

|

||||

server rather than the client).

|

||||

|

||||

</table>

|

||||

|

||||

See also: the "Recommended reading & related work" and "References"

|

||||

sections of the

|

||||

[Machi high level design doc](https://github.com/basho/machi/tree/master/doc/high-level-machi.pdf)

|

||||

for pointers to the MSR papers related to CORFU.

|

||||

|

||||

Machi does not implement Tango directly. (Not yet, at least.)

|

||||

However, there is a prototype implementation included in the Machi

|

||||

source tree. See

|

||||

[the prototype/tango source code directory](https://github.com/basho/machi/tree/master/prototype/tango)

|

||||

for details.

|

||||

|

||||

Also, it's worth adding that the original MSR code behind the research

|

||||

papers is now available at GitHub:

|

||||

[https://github.com/CorfuDB/CorfuDB](https://github.com/CorfuDB/CorfuDB).

|

||||

|

||||

<a name="n3">

|

||||

## 3. Machi's specifics

|

||||

|

||||

<a name="n3.1">

|

||||

### 3.1. What technique is used to replicate Machi's files? Can other techniques be used?

|

||||

|

||||

Machi uses Chain Replication to replicate all file data. Each byte of

|

||||

a file is stored using a "write-once register", which is a mechanism

|

||||

to enforce immutability after the byte has been written exactly once.

|

||||

|

||||

In order to ensure availability in the event of *F* failures, Chain

|

||||

Replication requires a minimum of *F + 1* servers to be configured.

|

||||

|

||||

Alternative mechanisms could be used to manage file replicas, such as

|

||||

Paxos or Raft. Both Paxos and Raft have some requirements that are

|

||||

difficult to adapt to Machi's design goals:

|

||||

|

||||

* Both protocols use quorum majority consensus, which requires a

|

||||

minimum of *2F + 1* working servers to tolerate *F* failures. For

|

||||

example, to tolerate 2 server failures, quorum majority protocols

|

||||

require a minimum of 5 servers. To tolerate the same number of

|

||||

failures, Chain Replication requires a minimum of only 3 servers.

|

||||

* Machi's use of "humming consensus" to manage internal server

|

||||

metadata state would also (probably) require conversion to Paxos or

|

||||

Raft. (Or "outsourced" to a service such as ZooKeeper.)

|

||||

|

||||

<a name="n3.2">

|

||||

### 3.2. Does Machi have a reliance on a coordination service such as ZooKeeper or etcd?

|

||||

|

||||

No. Machi maintains critical internal cluster information in an

|

||||

internal, immutable data service called the "projection store". The

|

||||

contents of the projection store are maintained by a new technique

|

||||

called "humming consensus".

|

||||

|

||||

Humming consensus is described in the

|

||||

[Machi chain manager high level design doc](https://github.com/basho/machi/tree/master/doc/high-level-chain-mgr.pdf).

|

||||

|

||||

<a name="n3.3">

|

||||

### 3.3. Are there any presentations available about Humming Consensus

|

||||

|

||||

Scott recently (November 2015) gave a presentation at the

|

||||

[RICON 2015 conference](http://ricon.io) about one of the techniques

|

||||

used by Machi; "Managing Chain Replication Metadata with

|

||||

Humming Consensus" is available online now.

|

||||

* [slides (PDF format)](http://ricon.io/speakers/slides/Scott_Fritchie_Ricon_2015.pdf)

|

||||

* [video](https://www.youtube.com/watch?v=yR5kHL1bu1Q)

|

||||

|

||||

<a name="n3.4">

|

||||

### 3.4. Is it true that there's an allegory written to describe Humming Consensus?

|

||||

|

||||

Yes. In homage to Leslie Lamport's original paper about the Paxos

|

||||

protocol, "The Part-time Parliamant", there is an allegorical story

|

||||

that describes humming consensus as method to coordinate

|

||||

many composers to write a single piece of music.

|

||||

The full story, full of wonder and mystery, is called

|

||||

["On “Humming Consensus”, an allegory"](http://www.snookles.com/slf-blog/2015/03/01/on-humming-consensus-an-allegory/).

|

||||

There is also a

|

||||

[short followup blog posting](http://www.snookles.com/slf-blog/2015/03/20/on-humming-consensus-an-allegory-part-2/).

|

||||

|

||||

<a name="n3.5">

|

||||

### 3.5. How is Machi tested?

|

||||

|

||||

While not formally proven yet, Machi's implementation of Chain

|

||||

Replication and of humming consensus have been extensively tested with

|

||||

several techniques:

|

||||

|

||||

* We use an executable model based on the QuickCheck framework for

|

||||

property based testing.

|

||||

* In addition to QuickCheck alone, we use the PULSE extension to

|

||||

QuickCheck is used to test the implementation

|

||||

under extremely skewed & unfair scheduling/timing conditions.

|

||||

|

||||

The model includes simulation of asymmetric network partitions. For

|

||||

example, actor A can send messages to actor B, but B cannot send

|

||||

messages to A. If such a partition happens somewhere in a traditional

|

||||

network stack (e.g. a faulty Ethernet cable), any TCP connection

|

||||

between A & B will quickly interrupt communication in _both_

|

||||

directions. In the Machi network partition simulator, network

|

||||

partitions can be truly one-way only.

|

||||

|

||||

After randomly generating a series of network partitions (which may

|

||||

change several times during any single test case) and a random series

|

||||

of cluster operations, an event trace of all cluster activity is used

|

||||

to verify that no safety-critical rules have been violated.

|

||||

|

||||

All test code is available in the [./test](./test) subdirectory.

|

||||

Modules that use QuickCheck will use a file suffix of `_eqc`, for

|

||||

example, [./test/machi_ap_repair_eqc.erl](./test/machi_ap_repair_eqc.erl).

|

||||

|

||||

<a name="n3.6">

|

||||

### 3.6. Does Machi require shared disk storage? e.g. iSCSI, NBD (Network Block Device), Fibre Channel disks

|

||||

|

||||

No, Machi's design assumes that each Machi server is a fully

|

||||

independent hardware and assumes only standard local disks (Winchester

|

||||

and/or SSD style) with local-only interfaces (e.g. SATA, SCSI, PCI) in

|

||||

each machine.

|

||||

|

||||

<a name="n3.7">

|

||||

### 3.7. Does Machi require or assume that servers with large numbers of disks must use RAID-0/1/5/6/10/50/60 to create a single block device?

|

||||

|

||||

No. When used with servers with multiple disks, the intent is to

|

||||

deploy multiple Machi servers per machine: one Machi server per disk.

|

||||

|

||||

* Pro: disk bandwidth and disk storage capacity can be managed at the

|

||||

level of an individual disk.

|

||||

* Pro: failure of an individual disk does not risk data loss on other

|

||||

disks.

|

||||

* Con (or pro, depending on the circumstances): in this configuration,

|

||||

Machi would require additional network bandwidth to repair data on a lost

|

||||

drive instead of intra-machine disk & bus & memory bandwidth that

|

||||

would be required for RAID volume repair

|

||||

* Con: replica placement policy, such as "rack awareness", becomes a

|

||||

larger problem that must be automated. For example, a problem of

|

||||

placement relative to 12 servers is smaller than a placement problem

|

||||

of managing 264 seprate disks (if each of 12 servers has 22 disks).

|

||||

|

||||

<a name="n3.8">

|

||||

### 3.8. What language(s) is Machi written in?

|

||||

|

||||

So far, Machi is written in Erlang, mostly. Machi uses at least one

|

||||

library, [ELevelDB](https://github.com/basho/eleveldb), that is

|

||||

implemented both in C++ and in Erlang, using Erlang NIFs (Native

|

||||

Interface Functions) to allow Erlang code to call C++ functions.

|

||||

|

||||

In the event that we encounter a performance problem that cannot be

|

||||

solved within the Erlang/OTP runtime environment, all of Machi's

|

||||

performance-critical components are small enough to be re-implemented

|

||||

in C, Java, or other "gotta go fast fast FAST!!" programming

|

||||

language. We expect that the Chain Replication manager and other

|

||||

critical "control plane" software will remain in Erlang.

|

||||

|

||||

<a name="n3.9">

|

||||

### 3.9. Can Machi run on Windows? Can Machi run on 32-bit platforms?

|

||||

|

||||

The ELevelDB NIF does not compile or run correctly on Erlang/OTP

|

||||

Windows platforms, nor does it compile correctly on 32-bit platforms.

|

||||

Machi should support all 64-bit UNIX-like platforms that are supported

|

||||

by Erlang/OTP and ELevelDB.

|

||||

|

||||

<a name="n3.10">

|

||||

### 3.10. Does Machi use the Erlang/OTP network distribution system (aka "disterl")?

|

||||

|

||||

No, Machi doesn't use Erlang/OTP's built-in distributed message

|

||||

passing system. The code would be *much* simpler if we did use

|

||||

"disterl". However, due to (premature?) worries about performance, we

|

||||

wanted to have the option of re-writing some Machi components in C or

|

||||

Java or Go or OCaml or COBOL or in-kernel assembly hexadecimal

|

||||

bit-twiddling magicSPEED ... without also having to find a replacement

|

||||

for disterl. (Or without having to re-invent disterl's features in

|

||||

another language.)

|

||||

|

||||

All wire protocols used by Machi are defined & implemented using

|

||||

[Protocol Buffers](https://developers.google.com/protocol-buffers/docs/overview).

|

||||

The definition file can be found at [./src/machi.proto](./src/machi.proto).

|

||||

|

||||

<a name="n3.11">

|

||||

### 3.11. Can I use HTTP to write/read stuff into/from Machi?

|

||||

|

||||

Short answer: No, not yet.

|

||||

|

||||

Longer answer: No, but it was possible as a hack, many months ago, see

|

||||

[primitive/hack'y HTTP interface that is described in this source code commit log](https://github.com/basho/machi/commit/6cebf397232cba8e63c5c9a0a8c02ba391b20fef).

|

||||

Please note that commit `6cebf397232cba8e63c5c9a0a8c02ba391b20fef` is

|

||||

required to try using this feature: the code has since bit-rotted and

|

||||

will not work on today's `master` branch.

|

||||

|

||||

In the long term, we'll probably want the option of an HTTP interface

|

||||

that is as well designed and REST'ful as possible. It's on the

|

||||

internal Basho roadmap. If you'd like to work on a real, not-kludgy

|

||||

HTTP interface to Machi,

|

||||

[please contact us!](https://github.com/basho/machi/blob/master/CONTRIBUTING.md)

|

||||

|

||||

297

INSTALLATION.md

|

|

@ -1,297 +0,0 @@

|

|||

|

||||

# Installation instructions for Machi

|

||||

|

||||

Machi is still a young enough project that there is no "installation".

|

||||

All development is still done using the Erlang/OTP interactive shell

|

||||

for experimentation, using `make` to compile, and the shell's

|

||||

`l(ModuleName).` command to reload any recompiled modules.

|

||||

|

||||

In the coming months (mid-2015), there are plans to create OS packages

|

||||

for common operating systems and OS distributions, such as FreeBSD and

|

||||

Linux. If building RPM, DEB, PKG, or other OS package managers is

|

||||

your specialty, we could use your help to speed up the process! <b>:-)</b>

|

||||

|

||||

## Development toolchain dependencies

|

||||

|

||||

Machi's dependencies on the developer's toolchain are quite small.

|

||||

|

||||

* Erlang/OTP version 17.0 or later, 32-bit or 64-bit

|

||||

* The `make` utility.

|

||||

* The GNU version of make is not required.

|

||||

* Machi is bundled with a `rebar` package and should be usable on

|

||||

any Erlang/OTP 17.x platform.

|

||||

|

||||

Machi does not use any Erlang NIF or port drivers.

|

||||

|

||||

## Development OS

|

||||

|

||||

At this time, Machi is 100% Erlang. Although we have not tested it,

|

||||

there should be no good reason why Machi cannot run on Erlang/OTP on

|

||||

Windows platforms. Machi has been developed on OS X and FreeBSD and

|

||||

is expected to work on any UNIX-ish platform supported by Erlang/OTP.

|

||||

|

||||

## Compiling the Machi source

|

||||

|

||||

First, clone the Machi source code, then compile it. You will

|

||||

need Erlang/OTP version 17.x to compile.

|

||||

|

||||

cd /some/nice/dev/place

|

||||

git clone https://github.com/basho/machi.git

|

||||

cd machi

|

||||

make

|

||||

make test

|

||||

|

||||

The unit test suite is based on the EUnit framework (bundled with

|

||||

Erlang/OTP 17). The `make test` suite runs on my MacBook in 10

|

||||

seconds or less.

|

||||

|

||||

## Setting up a Machi cluster

|

||||

|

||||

As noted above, everything is done manually at the moment. Here is a

|

||||

rough sketch of day-to-day development workflow.

|

||||

|

||||

### 1. Run the server

|

||||

|

||||

cd /some/nice/dev/place/machi

|

||||

make

|

||||

erl -pz ebin deps/*/ebin +A 253 +K true

|

||||

|

||||

This will start an Erlang shell, plus a few extras.

|

||||

|

||||

* Tell the OTP code loader where to find dependent BEAM files.

|

||||

* Set a large pool (253) of file I/O worker threads

|

||||

* Use a more efficient kernel polling mechanism for network sockets.

|

||||

* If your Erlang/OTP package does not support `+K true`, do not

|

||||

worry. It is an optional flag.

|

||||

|

||||

The following commands will start three Machi FLU server processes and

|

||||

then tell them to form a single chain. Internally, each FLU will have

|

||||

Erlang registered processes with the names `a`, `b`, and `c`, and

|

||||

listen on TCP ports 4444, 4445, and 4446, respectively. Each will use

|

||||

a data directory located in the current directory, e.g. `./data.a`.

|

||||

|

||||

Cut-and-paste the following commands into the CLI at the prompt:

|

||||

|

||||

application:ensure_all_started(machi).

|

||||

machi_flu_psup:start_flu_package(a, 4444, "./data.a", []).

|

||||

machi_flu_psup:start_flu_package(b, 4445, "./data.b", []).

|

||||

D = orddict:from_list([{a,{p_srvr,a,machi_flu1_client,"localhost",4444,[]}},{b,{p_srvr,b,machi_flu1_client,"localhost",4445,[]}}]).

|

||||

machi_chain_manager1:set_chain_members(a_chmgr, D).

|

||||

machi_chain_manager1:set_chain_members(b_chmgr, D).

|

||||

|

||||

If you change the TCP ports of any of the processes, you must make the

|

||||

same change both in the `machi_flu_psup:start_flu_package()` arguments

|

||||

and also in the `D` dictionary.

|

||||

|

||||

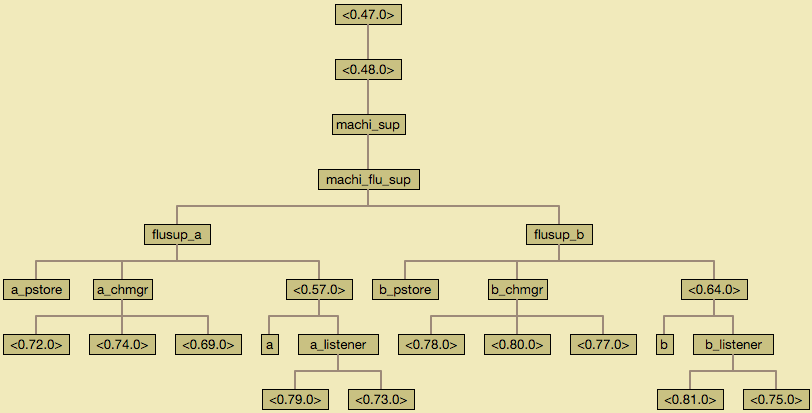

The Erlang processes that will be started are arranged in the

|

||||

following hierarchy. See the

|

||||

[machi_flu_psup.erl](http://basho.github.io/machi/edoc/machi_flu_psup.html)

|

||||

EDoc documentation for a description of each of these processes.

|

||||

|

||||

|

||||

|

||||

### 2. Check the status of the server processes.

|

||||

|

||||

Each Machi FLU is an independent file server. All replication between

|

||||

Machi servers is currently implemented by code on the *client* side.

|

||||

(This will change a bit later in 2015.)

|

||||

|

||||

Use the `read_latest_projection` command on the server CLI, e.g.:

|

||||

|

||||

rr("include/machi_projection.hrl").

|

||||

machi_projection_store:read_latest_projection(a_pstore, private).

|

||||

|

||||

... to query the projection store of the local FLU named `a`.

|

||||

|

||||

If you haven't looked at the server-side description of the various

|

||||

Machi server-side processes, please take a couple minutes to read

|

||||

[machi_flu_psup.erl](http://basho.github.io/machi/edoc/machi_flu_psup.html).

|

||||

|

||||

### 3. Use the machi_cr_client.erl client

|

||||

|

||||

For development work, I run the client & server on the same Erlang

|

||||

VM. It's just easier that way ... but the Machi client & server use

|

||||

TCP to communicate with each other.

|

||||

|

||||

If you are using a separate machine for the client, then compile the

|

||||

Machi source on the client machine. Then run:

|

||||

|

||||

cd /some/nice/dev/place/machi

|

||||

make

|

||||

erl -pz ebin deps/*/ebin

|

||||

|

||||

(You can add `+K true` if you wish ... but for light development work,

|

||||

it doesn't make a big difference.)

|

||||

|

||||

At the CLI, define the dictionary that describes the host & TCP port

|

||||

location for each of the Machi servers. (If you changed the host

|

||||

and/or TCP port values when starting the servers, then place make the

|

||||

same changes here.

|

||||

|

||||

D = orddict:from_list([{a,{p_srvr,a,machi_flu1_client,"localhost",4444,[]}},{b,{p_srvr,b,machi_flu1_client,"localhost",4445,[]}}]).

|

||||

|

||||

Then start a `machi_cr_client` client process.

|

||||

|

||||

{ok, C1} = machi_cr_client:start_link([P || {_,P} <- orddict:to_list(D)]).

|

||||

|

||||

Please keep in mind that this process is **linked** to your CLI

|

||||

process. If you run a CLI command the throws an exception/exits, then

|

||||

this `C1` process will also die! You can start a new one, using a

|

||||

different name, e.g. `C2`. Or you can start a new one by first

|

||||

"forgetting" the CLI's binding for `C1`.

|

||||

|

||||

f(C1).

|

||||

{ok, C1} = machi_cr_client:start_link([P || {_,P} <- orddict:to_list(D)]).

|

||||

|

||||

Now, append a small chunk of data to a file with the prefix

|

||||

`<<"pre">>`.

|

||||

|

||||

12> {ok, C1} = machi_cr_client:start_link([P || {_,P} <- orddict:to_list(D)]).

|

||||

{ok,<0.112.0>}

|

||||

|

||||

13> machi_cr_client:append_chunk(C1, <<"pre">>, <<"Hello, world">>).

|

||||

{ok,{1024,12,<<"pre.G6C116EA.3">>}}

|

||||

|

||||

|

||||

This chunk was written successfully to a file called

|

||||

`<<"pre.5BBL16EA.1">>` at byte offset 1024. Let's fetch it now. And

|

||||

let's see what happens in a couple of error conditions: fetching

|

||||

bytes that "straddle" the end of file, bytes that are after the known

|

||||

end of file, and bytes from a file that has never been written.

|

||||

|

||||

26> machi_cr_client:read_chunk(C1, <<"pre.G6C116EA.3">>, 1024, 12).

|

||||

{ok,<<"Hello, world">>}

|

||||

|

||||

27> machi_cr_client:read_chunk(C1, <<"pre.G6C116EA.3">>, 1024, 777).

|

||||

{error,partial_read}

|

||||

|

||||

28> machi_cr_client:read_chunk(C1, <<"pre.G6C116EA.3">>, 889323, 12).

|

||||

{error,not_written}

|

||||

|

||||

29> machi_cr_client:read_chunk(C1, <<"no-such-file">>, 1024, 12).

|

||||

{error,not_written}

|

||||

|

||||

### 4. Use the `machi_proxy_flu1_client.erl` client

|

||||

|

||||

The `machi_proxy_flu1_client` module implements a simpler client that

|

||||

only uses a single Machi FLU file server. This client is **not**

|

||||

aware of chain replication in any way.

|

||||

|

||||

Let's use this client to verify that the `<<"Hello, world!">> data

|

||||

that we wrote in step #3 was truly written to both FLU servers by the

|

||||

`machi_cr_client` library. We start proxy processes for each of the

|

||||

FLUs, then we'll query each ... but first we also need to ask (at

|

||||

least one of) the servers for the current Machi cluster's Epoch ID.

|

||||

|

||||

{ok, Pa} = machi_proxy_flu1_client:start_link(orddict:fetch(a, D)).

|

||||

{ok, Pb} = machi_proxy_flu1_client:start_link(orddict:fetch(b, D)).

|

||||

{ok, EpochID0} = machi_proxy_flu1_client:get_epoch_id(Pa).

|

||||

machi_proxy_flu1_client:read_chunk(Pa, EpochID0, <<"pre.G6C116EA.3">>, 1024, 12).

|

||||

machi_proxy_flu1_client:read_chunk(Pb, EpochID0, <<"pre.G6C116EA.3">>, 1024, 12).

|

||||

|

||||

### 5. Checking how Chain Replication "read repair" works

|

||||

|

||||

Now, let's cause some trouble: we will write some data only to the

|

||||

head of the chain. By default, all read operations go to the tail of

|

||||

the chain. But, if a value is not written at the tail, then "read

|

||||

repair" ought to verify:

|

||||

|

||||

* Perhaps the value truly is not written at any server in the chain.

|

||||

* Perhaps the value was partially written, i.e. by a buggy or

|

||||

crashed-in-the-middle-of-the-writing-procedure client.

|

||||

|

||||

So, first, let's double-check that the chain is in the order that we

|

||||

expect it to be.

|

||||

|

||||

rr("include/machi_projection.hrl"). % In case you didn't do this earlier.

|

||||

machi_proxy_flu1_client:read_latest_projection(Pa, private).

|

||||

|

||||

The part of the `#projection_v1` record that we're interested in is

|

||||

the `upi`. This is the list of servers that preserve the Update

|

||||

Propagation Invariant property of the Chain Replication algorithm.

|

||||

The output should look something like:

|

||||

|

||||

{ok,#projection_v1{

|

||||

epoch_number = 1119,

|

||||

[...]

|

||||

author_server = b,

|

||||

all_members = [a,b],

|

||||

creation_time = {1432,189599,85392},

|

||||

mode = ap_mode,

|

||||

upi = [a,b],

|

||||

repairing = [],down = [],

|

||||

[...]

|

||||

}

|

||||

|

||||

So, we see `upi=[a,b]`, which means that FLU `a` is the head of the

|

||||

chain and that `b` is the tail.

|

||||

|

||||

Let's append to `a` using the `machi_proxy_flu1_client` to the head

|

||||

and then read from both the head and tail. (If your chain order is

|

||||

different, then please exchange `Pa` and `Pb` in all of the commands

|

||||

below.)

|

||||

|

||||

16> {ok, {Off1,Size1,File1}} = machi_proxy_flu1_client:append_chunk(Pa, EpochID0, <<"foo">>, <<"Hi, again">>).

|

||||

{ok,{1024,9,<<"foo.K63D16M4.1">>}}

|

||||

|

||||

17> machi_proxy_flu1_client:read_chunk(Pa, EpochID0, File1, Off1, Size1). {ok,<<"Hi, again">>}

|

||||

|

||||

18> machi_proxy_flu1_client:read_chunk(Pb, EpochID0, File1, Off1, Size1).

|

||||

{error,not_written}

|

||||

|

||||

That is correct! Now, let's read the same file & offset using the

|

||||

client that understands chain replication. Then we will try reading

|

||||

directly from FLU `b` again ... we should see something different.

|

||||

|

||||

19> {ok, C2} = machi_cr_client:start_link([P || {_,P} <- orddict:to_list(D)]).

|

||||

{ok,<0.113.0>}

|

||||

|

||||

20> machi_cr_client:read_chunk(C2, File1, Off1, Size1).

|

||||

{ok,<<"Hi, again">>}

|

||||

|

||||

21> machi_proxy_flu1_client:read_chunk(Pb, EpochID0, File1, Off1, Size1).

|

||||

{ok,<<"Hi, again">>}

|

||||

|

||||

That is correct! The command at prompt #20 automatically performed

|

||||

"read repair" on FLU `b`.

|

||||

|

||||

### 6. Exploring what happens when a server is stopped, data written, and server restarted

|

||||

|

||||

Cut-and-paste the following into your CLI. We assume that your CLI

|

||||

still remembers the value of the `D` dictionary from the previous

|

||||

steps above. We will stop FLU `a`, write one thousand small

|

||||

chunks, then restart FLU `a`, then see what happens.

|

||||

|

||||

{ok, C3} = machi_cr_client:start_link([P || {_,P} <- orddict:to_list(D)]).

|

||||

machi_flu_psup:stop_flu_package(a).

|

||||

[machi_cr_client:append_chunk(C3, <<"foo">>, <<"Lots of stuff">>) || _ <- lists:seq(1,1000)].

|

||||

machi_flu_psup:start_flu_package(a, 4444, "./data.a", []).

|

||||

|

||||

About 10 seconds after we restarting `a` with the

|

||||

`machi_flu_psup:start_flu_package()` function, this appears on the

|

||||

console:

|

||||

|

||||

=INFO REPORT==== 21-May-2015::15:53:46 ===

|

||||

Repair start: tail b of [b] -> [a], ap_mode ID {b,{1432,191226,707262}}

|

||||

MissingFileSummary [{<<"foo.CYLJ16ZT.1">>,{14024,[a]}}]

|

||||

Make repair directives: . done

|

||||

Out-of-sync data for FLU a: 0.1 MBytes

|

||||

Out-of-sync data for FLU b: 0.0 MBytes

|

||||

Execute repair directives: .......... done

|

||||

|

||||

=INFO REPORT==== 21-May-2015::15:53:47 ===

|

||||

Repair success: tail b of [b] finished ap_mode repair ID {b,{1432,191226,707262}}: ok

|

||||

Stats [{t_in_files,0},{t_in_chunks,1000},{t_in_bytes,13000},{t_out_files,0},{t_out_chunks,1000},{t_out_bytes,13000},{t_bad_chunks,0},{t_elapsed_seconds,0.647}]

|

||||

|

||||

The data repair process, executed by `b`'s chain manager, found 1000

|

||||

chunks that were out of sync and copied them to `a` successfully.

|

||||

|

||||

### 7. Exploring the rest of the client APIs

|

||||

|

||||

Please see the EDoc documentation for the client APIs. Feel free to

|

||||

explore!

|

||||

|

||||

* [Erlang type definitions for the client APIs](http://basho.github.io/machi/edoc/machi_flu1_client.html)

|

||||

* [EDoc for machi_cr_client.erl](http://basho.github.io/machi/edoc/machi_cr_client.html)

|

||||

* [EDoc for machi_proxy_flu1_client.erl](http://basho.github.io/machi/edoc/machi_proxy_flu1_client.html)

|

||||

* [Top level EDoc collection](http://basho.github.io/machi/edoc/)

|

||||

178

LICENSE

|

|

@ -1,178 +0,0 @@

|

|||

|

||||

Apache License

|

||||

Version 2.0, January 2004

|

||||

http://www.apache.org/licenses/

|

||||

|

||||

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

||||

|

||||

1. Definitions.

|

||||

|

||||

"License" shall mean the terms and conditions for use, reproduction,

|

||||

and distribution as defined by Sections 1 through 9 of this document.

|

||||

|

||||

"Licensor" shall mean the copyright owner or entity authorized by

|

||||

the copyright owner that is granting the License.

|

||||

|

||||

"Legal Entity" shall mean the union of the acting entity and all

|

||||

other entities that control, are controlled by, or are under common

|

||||

control with that entity. For the purposes of this definition,

|

||||

"control" means (i) the power, direct or indirect, to cause the

|

||||

direction or management of such entity, whether by contract or

|

||||

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

||||

outstanding shares, or (iii) beneficial ownership of such entity.

|

||||

|

||||

"You" (or "Your") shall mean an individual or Legal Entity

|

||||

exercising permissions granted by this License.

|

||||

|

||||

"Source" form shall mean the preferred form for making modifications,

|

||||

including but not limited to software source code, documentation

|

||||

source, and configuration files.

|

||||

|

||||

"Object" form shall mean any form resulting from mechanical

|

||||

transformation or translation of a Source form, including but

|

||||

not limited to compiled object code, generated documentation,

|

||||

and conversions to other media types.

|

||||

|

||||

"Work" shall mean the work of authorship, whether in Source or

|

||||

Object form, made available under the License, as indicated by a

|

||||

copyright notice that is included in or attached to the work

|

||||

(an example is provided in the Appendix below).

|

||||

|

||||

"Derivative Works" shall mean any work, whether in Source or Object

|

||||

form, that is based on (or derived from) the Work and for which the

|

||||

editorial revisions, annotations, elaborations, or other modifications

|

||||

represent, as a whole, an original work of authorship. For the purposes

|

||||

of this License, Derivative Works shall not include works that remain

|

||||

separable from, or merely link (or bind by name) to the interfaces of,

|

||||

the Work and Derivative Works thereof.

|

||||

|

||||

"Contribution" shall mean any work of authorship, including

|

||||

the original version of the Work and any modifications or additions

|

||||

to that Work or Derivative Works thereof, that is intentionally

|

||||

submitted to Licensor for inclusion in the Work by the copyright owner

|

||||

or by an individual or Legal Entity authorized to submit on behalf of

|

||||

the copyright owner. For the purposes of this definition, "submitted"

|

||||

means any form of electronic, verbal, or written communication sent

|

||||

to the Licensor or its representatives, including but not limited to

|

||||

communication on electronic mailing lists, source code control systems,

|

||||

and issue tracking systems that are managed by, or on behalf of, the

|

||||

Licensor for the purpose of discussing and improving the Work, but

|

||||

excluding communication that is conspicuously marked or otherwise

|

||||

designated in writing by the copyright owner as "Not a Contribution."

|

||||

|

||||

"Contributor" shall mean Licensor and any individual or Legal Entity

|

||||

on behalf of whom a Contribution has been received by Licensor and

|

||||

subsequently incorporated within the Work.

|

||||

|

||||

2. Grant of Copyright License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

copyright license to reproduce, prepare Derivative Works of,

|

||||

publicly display, publicly perform, sublicense, and distribute the

|

||||

Work and such Derivative Works in Source or Object form.

|

||||

|

||||

3. Grant of Patent License. Subject to the terms and conditions of

|

||||

this License, each Contributor hereby grants to You a perpetual,

|

||||

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

||||

(except as stated in this section) patent license to make, have made,

|

||||

use, offer to sell, sell, import, and otherwise transfer the Work,

|

||||

where such license applies only to those patent claims licensable

|

||||

by such Contributor that are necessarily infringed by their

|

||||

Contribution(s) alone or by combination of their Contribution(s)

|

||||

with the Work to which such Contribution(s) was submitted. If You

|

||||

institute patent litigation against any entity (including a

|

||||

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

||||

or a Contribution incorporated within the Work constitutes direct

|

||||

or contributory patent infringement, then any patent licenses

|

||||

granted to You under this License for that Work shall terminate

|

||||

as of the date such litigation is filed.

|

||||

|

||||

4. Redistribution. You may reproduce and distribute copies of the

|

||||

Work or Derivative Works thereof in any medium, with or without

|

||||

modifications, and in Source or Object form, provided that You

|

||||

meet the following conditions:

|

||||

|

||||

(a) You must give any other recipients of the Work or

|

||||

Derivative Works a copy of this License; and

|

||||

|

||||

(b) You must cause any modified files to carry prominent notices

|

||||

stating that You changed the files; and

|

||||

|

||||

(c) You must retain, in the Source form of any Derivative Works

|

||||

that You distribute, all copyright, patent, trademark, and

|

||||

attribution notices from the Source form of the Work,

|

||||

excluding those notices that do not pertain to any part of

|

||||

the Derivative Works; and

|

||||

|

||||

(d) If the Work includes a "NOTICE" text file as part of its

|

||||

distribution, then any Derivative Works that You distribute must

|

||||

include a readable copy of the attribution notices contained

|

||||

within such NOTICE file, excluding those notices that do not

|

||||

pertain to any part of the Derivative Works, in at least one

|

||||

of the following places: within a NOTICE text file distributed

|

||||

as part of the Derivative Works; within the Source form or

|

||||

documentation, if provided along with the Derivative Works; or,

|

||||

within a display generated by the Derivative Works, if and

|

||||

wherever such third-party notices normally appear. The contents

|

||||

of the NOTICE file are for informational purposes only and

|

||||

do not modify the License. You may add Your own attribution

|

||||

notices within Derivative Works that You distribute, alongside

|

||||

or as an addendum to the NOTICE text from the Work, provided

|

||||

that such additional attribution notices cannot be construed

|

||||

as modifying the License.

|

||||

|

||||

You may add Your own copyright statement to Your modifications and

|

||||

may provide additional or different license terms and conditions

|

||||

for use, reproduction, or distribution of Your modifications, or

|

||||

for any such Derivative Works as a whole, provided Your use,

|

||||

reproduction, and distribution of the Work otherwise complies with

|

||||

the conditions stated in this License.

|

||||

|

||||

5. Submission of Contributions. Unless You explicitly state otherwise,

|

||||

any Contribution intentionally submitted for inclusion in the Work

|

||||

by You to the Licensor shall be under the terms and conditions of

|

||||

this License, without any additional terms or conditions.

|

||||

Notwithstanding the above, nothing herein shall supersede or modify

|

||||

the terms of any separate license agreement you may have executed

|

||||

with Licensor regarding such Contributions.

|

||||

|

||||

6. Trademarks. This License does not grant permission to use the trade

|

||||

names, trademarks, service marks, or product names of the Licensor,

|

||||

except as required for reasonable and customary use in describing the

|

||||

origin of the Work and reproducing the content of the NOTICE file.

|

||||

|

||||

7. Disclaimer of Warranty. Unless required by applicable law or

|

||||

agreed to in writing, Licensor provides the Work (and each

|

||||

Contributor provides its Contributions) on an "AS IS" BASIS,

|

||||

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

||||

implied, including, without limitation, any warranties or conditions

|

||||

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

||||

PARTICULAR PURPOSE. You are solely responsible for determining the

|

||||

appropriateness of using or redistributing the Work and assume any

|

||||

risks associated with Your exercise of permissions under this License.

|

||||

|

||||

8. Limitation of Liability. In no event and under no legal theory,

|

||||

whether in tort (including negligence), contract, or otherwise,

|

||||